Engineering Troubleshooting 101: Possibility Spaces and Proxies

Divide and conquer your assumptions.

In games, and indeed in all engineering, you will come across problems that baffle you at first glance. If you’ve never encountered a problem and just thought ‘damn I have no idea’, you’re lying or you’re simply too junior to have ever encountered anything really fucked.

Earlier this week, Network Engineering Influencer “TracketPacer” (presumably a Spoonerism of the Cisco network simulation tool “Packet Tracer”) posted a video of a network hub looped back on itself. Confusingly, this did not create a broadcast storm, as she’d hoped.

For those of you not familiar with the dark arts of Network Engineering that underpin our entire online infrastructure, looping Ethernet switches or hubs usually creates a self-sustaining broadcast storm that very quickly consumes all available bandwidth. There are protocols designed to prevent this, but they’re usually only available on higher end “managed” switches. Her basic, unmanaged Netgear hubs didn’t have anything like that. They were arguably some of the dumbest network devices in existence.

And yet, when she fired up Wireshark (a network capture program) to look at the network traffic and sent some pings out, she could only see ARP requests going out for the ping attempt, and nothing else. No storm, no flood, no nothing. So what gives?

It’s tempting to just dive in and start screwing around, but we’re professionals here, and we’re trying to be better professionals, so here’s a technique I like to call “Possibility Space Division”.

Possibility Spaces

A possibility space is a scenario that, if true, constrains where the root cause can live. Laying these scenarios out partitions the potential root causes of an issue into a forking decision tree (n-way, or binary for the programmers) whose branches can be evaluated and eliminated.

What this means in practice is generally asking questions in the shape of “Either X is true or it is false”. Dividing our problems like this serves two purposes:

To eliminate entire segments of potential causes at once

To help us track what potential problems we have eliminated

Both of these serve a single goal: to help us drill down and identify the root cause as quickly as possible.

Note on the OSI model: IP addresses/routers exist at Layer 3, and switches exist at Layer 2. Hubs are strictly Layer 1 repeaters, but a hub network is a single broadcast domain just like a simple switched one, so they are grouped with Layer 2 here. These layers stack on top of each other to provide end-to-end connectivity between devices on networks and the Internet. When we refer to “Layer 2” we are referring to switch/hub networks, and when we refer to “Layer 3” we are referring to routing between IP addresses.

So, let’s evaluate what we think we know about this problem. We have three pieces of information.

There is a looped layer 2 network

Looped layer 2 networks, absent an anti-loop protocol, create broadcast storms

Wireshark didn’t show a broadcast storm

You’ll notice that I didn’t say “there wasn’t a broadcast storm”. This is because of a second layer of troubleshooting that you need to be aware of as an engineer - that everything we do, trust, and rely on, is based on assumptions and proxies. We have to verify those assumptions. Our user story was “Broadcast storm should happen but doesn’t”. But do we actually know that it didn’t happen? Not yet. All we really know is that Wireshark didn’t show one.

So we have two possibility spaces:

Either there wasn’t a broadcast storm, or;

There was a broadcast storm and Wireshark wasn’t displaying it

We now have the root node of a binary tree of troubleshooting, and we get to choose which assumption we want to validate first, to determine which one we need to investigate. But which one should we investigate first?

That choice is simple: use Occam’s razor, and investigate the possibility with the fewest assumptions. Anything involving a PC is orders of magnitude more complicated than a network device, let alone one of the dumbest network devices on the planet. And yeah, there’s some low hanging fruit on Wireshark, but there are a million things that could go wrong there. Driver error, usbpcap failure, OS failures, misconfigurations, promiscuous mode not enabled, all sorts of stuff. But the network is simple and these hubs are simple, so we should look at the hubs first: either we will find our answer quickly or we will eliminate them quickly.
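To make the tracking part concrete, here is a minimal sketch of a possibility tree in Python. The class name, method names, and the exact wording of each claim are illustrative assumptions, not anything from the video or the hub manual; the point is only that eliminating a node eliminates everything beneath it, and that what remains is your to-do list.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Possibility:
    """One node in the troubleshooting tree: a claim that is either true or false."""
    claim: str
    status: Optional[bool] = None  # None = untested, True = confirmed, False = eliminated
    children: list["Possibility"] = field(default_factory=list)

    def eliminate(self) -> None:
        """Rule this claim out; everything beneath it goes with it."""
        self.status = False
        for child in self.children:
            child.eliminate()

    def open_leaves(self) -> list[str]:
        """Claims still worth testing: the deepest untested claims on live branches."""
        if self.status is False:
            return []
        live = [leaf for child in self.children for leaf in child.open_leaves()]
        if live:
            return live
        return [self.claim] if self.status is None else []


# Hypothetical tree for the looped-hub problem (claim wording is illustrative).
no_storm = Possibility("There was no sustained storm", children=[
    Possibility("The hubs themselves stopped it"),
])
missed = Possibility("There was a storm and Wireshark did not display it", children=[
    Possibility("Driver error"),
    Possibility("Promiscuous mode not enabled"),
])
root = Possibility("Wireshark showed no broadcast storm", status=True,
                   children=[no_storm, missed])

# In a strict either/or split, ruling out one side prunes its whole subtree.
missed.eliminate()
print(root.open_leaves())
```

Once the Wireshark branch is eliminated, none of its children ever need to be looked at, which is exactly the time saving the technique is after.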

We Looked At The Hubs

As it turns out, an enterprising user in the replies looked up the manual for the hub in question and found what the colors of the lights mean for it. There was a yellow light on the port, which, if you consult the user manual for the device, indicates the port has been partitioned (separated for excessive collisions etc), and thus was not forwarding. So a storm had started, immediately created collisions, and been shut down by partitioning. Did you know that was a thing? Who among us has used a hub recently? Did anyone recognize the yellow light or remember that port partitioning existed, or that collisions would need to be handled? I didn’t, but if you check the manual, it’s right there.

In the brief few seconds we saw the network devices, we could see two things.

The ports themselves weren’t blinking with a lot of traffic

The ports had a secondary yellow light

Now, you could write the not-blinking off as ‘the storm hasn’t started yet’, which might be reasonable in the video because they usually take a little bit to get going, but ultimately “port is partitioned” explained everything, and we never had to dig into the complex option of “what if Wireshark is lying to me?”

When dealing with possibility space division, simplicity is your friend, and speed of fork elimination is your other friend. This goes double during an outage.

Why Does This Matter?

Quite frankly, it matters because some of you are very, very bad at troubleshooting.

One time in recent history, a Computer Science Fundamentals course instructor I came across was having trouble with his web server. He was Not A Web Guy and got stuck quickly. He should have realized very quickly where his problem was, because all the information he needed was available, and he was continuing to attempt to re-check things that he had already validated. He was not respecting the fork, he wasn’t tracking what he’d validated, and so he wasted an entire day chasing the problem.

If you know anything about the internet, you know that a working website depends on a handful of things.

A domain name pointing to an IP address

IP traffic being able to be routed to that IP address

Clients being able to look up that domain name and resolve the IP address

Clients being able to craft an HTTP request, and send it to that IP address

Those requests may need to be routed to the IP (load balancer, application layer firewall, etc)

A process on a server somewhere listening on a port must receive that request.

That request needs to be processed by the service process and then Stuff Happens to look up what the contents of the web page must be and return it

Our instructor could ping the server by its DNS name, via a command like ping mycompscicourse.com and get responses back. This proves without a doubt two things:

DNS is working. It was successfully resolving the IP address of the domain name.

Layer 3 (IP address) is working. Ping was successfully arriving on that IP and it was replying.

At this stage, he should have been looking further down the stack of things that need to happen. The correct next step is to try to connect to the server by DNS name on the appropriate port and see if it works.

If it does, then we can move further down and investigate the server process.

If it doesn’t, then we need to validate that HTTP requests can reach the server.

However, he didn’t attempt to validate those. He wasn’t scientific about it, and didn’t bother to think through that he had actually validated DNS and IP already. This man wasted hours messing around with DNS configurations and all sorts of other things, but his initial testing had already validated DNS. He was re-investigating things that should have been cleared.
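The walk down the stack can be sketched in Python as an ordered list of checks, each run once, stopping at the first failure. This is a sketch under assumptions: the function names, port 80, and the five-second timeout are mine, and it uses only the standard library, so it skips the ICMP ping step (the instructor had already validated that by hand).

```python
import socket
import urllib.request
from typing import Callable, Optional

Check = tuple[str, Callable[[], object]]


def first_failure(checks: list[Check]) -> Optional[str]:
    """Run checks in order, once each; return the name of the first layer that fails."""
    for name, check in checks:
        try:
            check()
        except Exception as exc:  # any failure means this layer is the place to dig
            print(f"FAIL {name}: {exc}")
            return name
        print(f"ok   {name}")  # validated: do not re-investigate this layer
    return None


def web_checks(host: str, port: int = 80, timeout: float = 5.0) -> list[Check]:
    """Build checks ordered from the bottom of the stack up (no network I/O happens here)."""
    def tcp_connect() -> None:
        socket.create_connection((host, port), timeout=timeout).close()

    def http_get() -> None:
        urllib.request.urlopen(f"http://{host}:{port}/", timeout=timeout).close()

    return [
        ("DNS resolves", lambda: socket.gethostbyname(host)),
        ("TCP port accepts connections", tcp_connect),
        ("HTTP request gets a response", http_get),
    ]
```

Calling first_failure(web_checks("mycompscicourse.com")) would print one line per layer and name the first layer that fails. Everything printed as ok above that line is cleared, and the list order is what stops you from wandering back to DNS.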

If it’s this easy for an instructor to fuck up, you better believe we’re all capable of it if we’re not tracking the depth and forking of our troubleshooting, or the assumptions that we’re making.

Your Tools Can Lie

The other reason that this is important is that there is a moment some time right before you become a senior where you realize that all your evidence for anything is based on assumptions and we all stand on the shoulders of giants.

When I was a Network Engineer, I got called out at 3am for a network that was down. I logged into the router and there was no evidence it was actually down except for the fact that it was definitely down. Every single tool reported that it should have been working. The one thing that stood out was that the CPU was kind of high, but the CPU on that router was kind of shitty so that wasn’t abnormal.

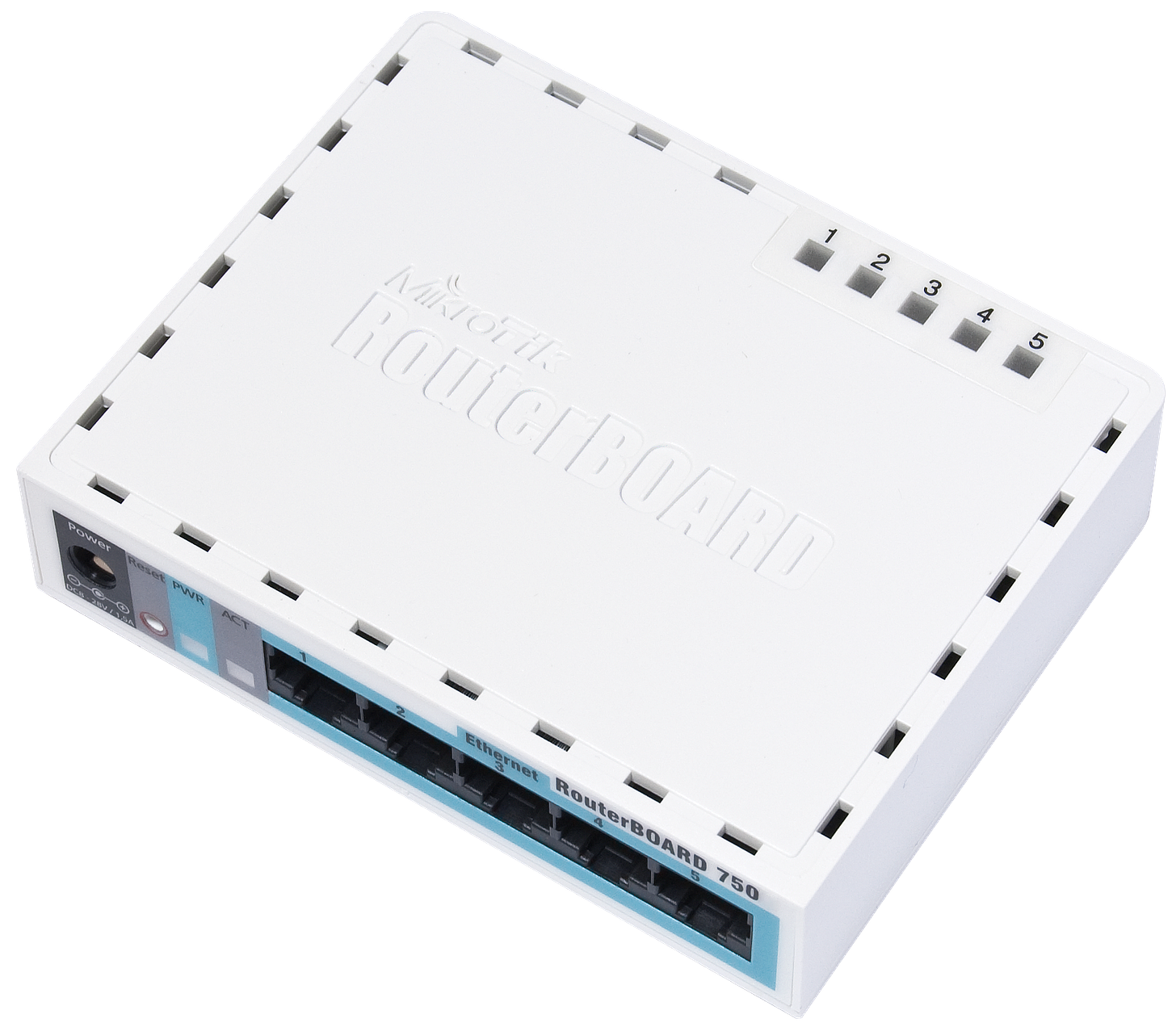

So, I began eliminating things and validating my assumptions. The routing table correctly showed that the internet was reachable out the Internet port and my customer was available out of the customer port. But no matter what I did, I couldn’t get ping responses through it. I wanted to reboot it, but it was carrying critical traffic for another customer and the provider said no. Fortunately, I carried a tool for these situations - a tiny but full-featured ethernet router, a Mikrotik RB750GL.

I configured it as a packet sniffer to aggregate the inbound and outbound traffic into a single port and send them to my laptop where the awaiting Wireshark would be able to show me what was really happening. I set up another laptop nearby and started generating traffic so I could 100% confirm that traffic I was sending was traversing the router and coming out the other side.

Friends. It was not coming out the other side. It went out a different interface entirely. I sat baffled by a router that clearly said route X was going out interface A, but I could see it coming out interface B. So, first a few facts:

The customer’s network could definitely not reach the Internet or vice versa

The router’s software reported correct routes for both customer and Internet

Packets traversing the router did not follow those routes

So, back to the possibility spaces. Either:

The router is telling the truth

Or it isn’t, and it needs to be rebooted or replaced

Possibility space 2 is bad, interruptive, and in the worst case, expensive, so let’s start with possibility space 1. “We know the routing table is wrong” is low hanging fruit, but we need to be precise with language here.

What we actually know is that the output of “show ip route” doesn’t match the observed flow of packets. How could that happen? Where does “show ip route” get its information from, and how do packets actually traverse the router?

There lay the answer. This was a hardware accelerated router. Although it relied on its CPU to learn about routes and configuration, the actual packet forwarding happened in hardware. The process went something like this:

A route is learned or configured in software

The routing table (RIB) is populated with that entry and a destination

The in-hardware forwarding table (FIB) that actually passes the packets around receives messages about RIB updates that tell it how to configure the actual forwarding

So I queried the FIB, and sure enough, there were the entries that were punting all our client’s packets out the wrong interface. But then something jumped out at me - a single line in the output that said something like:

Queued updates: 51,239

I hit the command again and the number decreased slightly. Why would there be 50k+ queued updates? I went back to the logs again and found the source of all our woes - the internet link had been flapping furiously overnight. Up, down, up, down. Someone had likely disturbed a cable, so it would start and stop working over and over again. Problem was, though, this was a BGP connection - it worked by sending the router instructions for how to reach every single network on the internet. At the time, the full global routing table was around 500,000 prefixes (destinations). Because of the magnitude of this kind of change, connections like this usually carry some configuration to stop flapping from affecting the router. This one didn’t. So when the internet connection started going up and down over and over, our poor little router was receiving an entire internet’s worth of destinations, then being told “oops no destinations, delete everything I just sent you”, then milliseconds later, an entire internet’s worth of updates again. This happened ~100,000 times, leaving our poor, tiny, underpowered router CPU with a queue of fifty billion route updates to process.

A few more refreshes and we could see the queue decreasing enough that napkin math said it would fix itself in 4 hours. I hacked up a workaround using the Mikrotik router, then came back in the afternoon to pick it up when the queue had emptied, and all was fine.
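The napkin math is just a linear extrapolation from two readings of the queue counter. Here is a sketch of it in Python; the function name is mine, and the second sample below (taken 60 seconds later) is an invented number chosen for illustration. Only the 51,239 reading and the roughly four-hour estimate come from the story.

```python
def estimate_drain_seconds(samples: list[tuple[float, int]]) -> float:
    """Linear extrapolation from (seconds_elapsed, queue_depth) samples.

    Uses the first and last sample to get an average drain rate, then
    divides the remaining queue depth by that rate.
    """
    (t0, q0), (t1, q1) = samples[0], samples[-1]
    drained = q0 - q1
    if t1 <= t0 or drained <= 0:
        raise ValueError("queue is not shrinking; no estimate possible")
    rate = drained / (t1 - t0)  # updates processed per second
    return q1 / rate


# First reading is from the story; the second is hypothetical.
samples = [(0.0, 51_239), (60.0, 51_026)]
hours = estimate_drain_seconds(samples) / 3600
print(f"about {hours:.1f} hours to drain")
```

The estimate assumes the drain rate stays constant and nothing new lands in the queue, which is itself an assumption worth re-checking with another sample a few minutes later.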

When I observed the RIB, I wasn’t looking at where the packets were going. I was looking at where the software intended them to go. It wasn’t until I looked at the FIB that I could see where the packets were actually going. Now most of the time, the RIB and FIB are in sync and that’s not an issue, so looking at the RIB as a proxy for the FIB is usually fine. Today it wasn’t. Every tool is querying something to get its information, and that query works in a particular way. Many of those ways can be broken, and choosing to ignore the ways in which they can break is one of the assumptions that we make our decisions on.
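Checking a proxy against the thing it stands in for can be as simple as a diff. The sketch below assumes the RIB and FIB have each been parsed into a mapping of prefix to egress interface; the function name, the prefixes (documentation ranges), and the interface names are all hypothetical, not the router's actual output.

```python
from typing import Optional


def forwarding_mismatches(
    rib: dict[str, str], fib: dict[str, str]
) -> dict[str, tuple[str, Optional[str]]]:
    """Return prefixes whose programmed (FIB) interface differs from the intended (RIB) one.

    Each value is (intended_interface, programmed_interface); the second
    element is None when the FIB has no entry for that prefix at all.
    """
    return {
        prefix: (intended, fib.get(prefix))
        for prefix, intended in rib.items()
        if fib.get(prefix) != intended
    }


# Hypothetical tables: the software's intent versus what the hardware is doing.
rib = {"0.0.0.0/0": "internet0", "203.0.113.0/24": "customer0"}
fib = {"0.0.0.0/0": "internet0", "203.0.113.0/24": "internet0"}

print(forwarding_mismatches(rib, fib))
```

An empty result means the proxy can be trusted for now; anything else tells you exactly which prefixes the hardware is forwarding differently from what the routing table claims.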

What Did We Learn?

Lesson 1: By separating the possible failure conditions into binary tree spaces we can prune branches of troubleshooting and save a lot of time.

Lesson 2: Understand the difference between information and a proxy for information.

My first thought was that the command “show ip route” lied to me. But it didn’t, not really. Through force of habit (and the fact that it’s usually true) I expected the output of the RIB to match how packets were forwarded, but that was an assumption on my part. It’s one that’s usually correct, but that day it wasn’t, and if I hadn’t been able to identify and interrogate that assumption, I would have never solved the issue.

I believed “show ip route” was showing me the way that packets would flow through the router, but it was a proxy for that - it sat upstream of the thing that actually did the forwarding.

Best of luck in your troubleshooting.

// for those we have lost

// for those we can yet save