NFT Fantasy 3: Why None Of The NFT Plans Even Make Logical Sense

You can't fix a bad plan with more code.


Welcome back to Load-bearing Tomato.

This final part addresses the logic that underpins inter-system communication.

Now that we’ve established that NFTs cannot take items between games, and that NFTs don’t enable knowing what you own in other games, it’s time to get down to the most important thing:

The plans for NFTs in games do not make logical sense at a fundamental level.

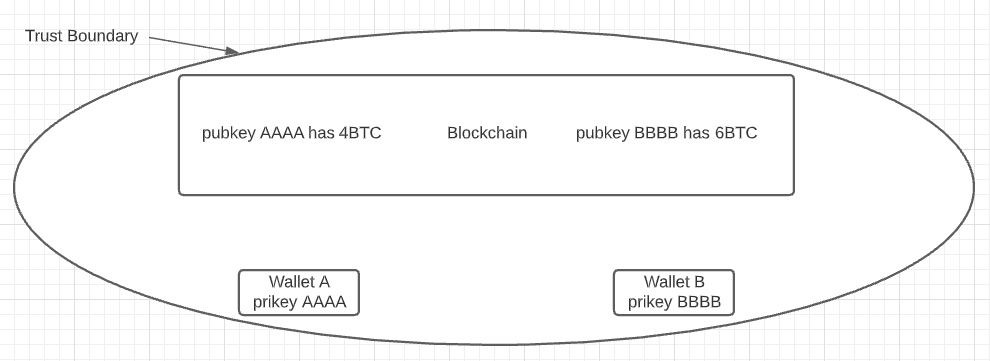

Blockchains, at their core, are vehicles for establishing trust in an untrusted system. They are an immutable record of transactions that can be mathematically proven to have happened.

And that’s great for things like cryptocurrencies, where everything the blockchain knows about exists entirely within that system.

Wallet A has 5 BTC, Wallet B has 5 BTC.

Wallet A transfers 1 BTC to Wallet B.

Wallet A now has 4 BTC, Wallet B has 6 BTC.
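To make the self-contained case concrete, here is a minimal Python sketch of a toy ledger. The `Ledger` class and its methods are hypothetical, not any real blockchain's API, and real chains add signatures, blocks, and consensus on top. The point is that every rule it enforces, and every fact it records, concerns only its own balances, so anyone can replay the history and check the result.

```python
from typing import Dict, List, Tuple


class Ledger:
    """A toy ledger whose every rule and record concerns only its own state."""

    def __init__(self, balances: Dict[str, int]) -> None:
        self.balances = dict(balances)
        self.history: List[Tuple[str, str, int]] = []

    def transfer(self, sender: str, receiver: str, amount: int) -> None:
        # The ledger can validate this on its own: no outside information is needed.
        if amount <= 0 or self.balances.get(sender, 0) < amount:
            raise ValueError("invalid transfer")
        self.balances[sender] -= amount
        self.balances[receiver] = self.balances.get(receiver, 0) + amount
        self.history.append((sender, receiver, amount))

    def replay(self, initial: Dict[str, int]) -> Dict[str, int]:
        # Anyone can recompute the current balances from the start state plus history.
        state = dict(initial)
        for sender, receiver, amount in self.history:
            state[sender] -= amount
            state[receiver] = state.get(receiver, 0) + amount
        return state


initial = {"Wallet A": 5, "Wallet B": 5}
ledger = Ledger(initial)
ledger.transfer("Wallet A", "Wallet B", 1)
print(ledger.balances)  # {'Wallet A': 4, 'Wallet B': 6}
```

Nothing in this ledger depends on anything outside it, which is exactly the situation where a blockchain's guarantees are meaningful.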

The Trust Boundary

The system is self-contained: it doesn’t care about anything but the state of the wallets and transactions it contains. For this kind of system, blockchains do a great job of establishing trust. To evaluate trust formally, we use what's called a Trust Boundary: a line you draw between a system you trust and one that you don't.

This is where systems like NFTs based on cryptocurrencies fall over dramatically. A cryptocurrency describes only itself. The second the thing you are describing is off the blockchain (off-chain), you are no longer trusting what happened; you are trusting what someone said happened. Anything that occurs off-chain crosses the trust boundary and thus can't be trusted.

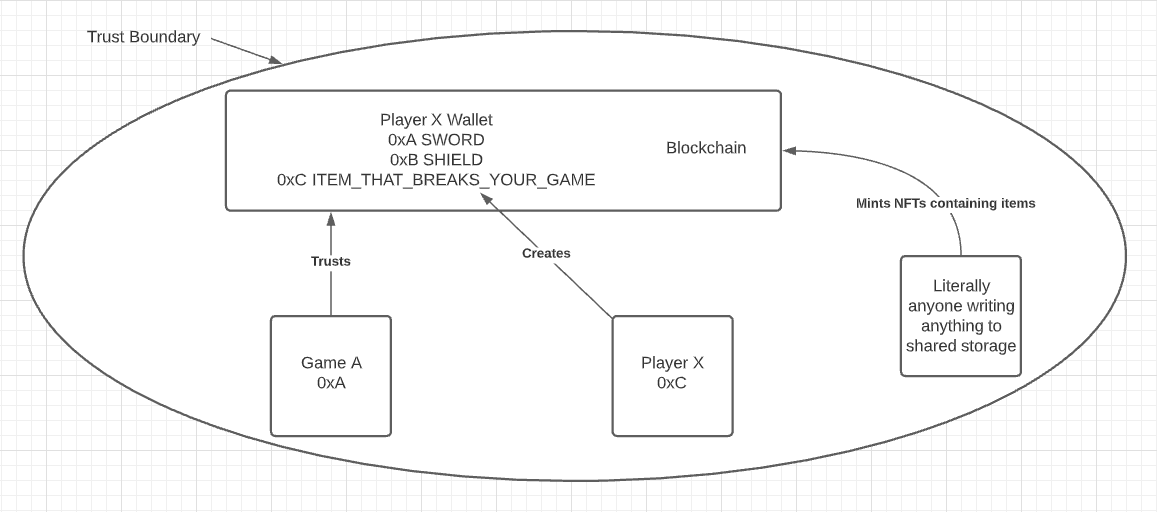

The lie of ‘items/skins as NFTs’ relies on a server that reports that a user gained an item. Once the data is no longer self-contained, we can’t think of a blockchain as something we can trust, because anyone can write to it.

Instead, we need to think of it more like shared storage, like the hard drive of a shared computer. Remember the warnings on websites you log into: ‘if this is a shared computer, don’t save your password’? There’s a reason for that. Now imagine your computer is in a public library, and anyone can save files on it, but, like a blockchain, no one can edit them after they’ve been saved. How do you know which files are safe to open? How do you know which files contain information you need? How can you trust any file that you didn’t create?
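The library analogy can be sketched in a few lines of Python. `SharedLog` is a hypothetical append-only store standing in for a chain; a real chain would prove authorship with cryptographic signatures, which this toy skips. It records who posted each claim, but it has no way to check whether a claim about the outside world is true.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Record:
    author: str  # who posted it (a real chain would prove this with a signature)
    claim: str   # what they say happened off-chain


class SharedLog:
    """A toy append-only log: anyone can append, no one can edit or delete."""

    def __init__(self) -> None:
        self._records: List[Record] = []

    def append(self, author: str, claim: str) -> None:
        self._records.append(Record(author, claim))

    def records(self) -> Tuple[Record, ...]:
        return tuple(self._records)  # a read-only snapshot


log = SharedLog()
log.append("game_a_server", "player_x earned BIG_HAT")  # Game A's server reporting a drop
log.append("player_x", "player_x earned BIG_HAT")       # Player X vouching for themself

# Both records are equally "on the chain"; only the author differs.
# Which claims to believe is a policy decided outside the log.
trusted_authors = {"game_a_server"}
believed = [r for r in log.records() if r.author in trusted_authors]
print(len(log.records()), len(believed))  # 2 1
```

The log faithfully preserves both claims; deciding which one matters is the trust boundary, and it lives entirely outside the log.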

So, if you think about the ‘items and skins as NFTs’ idea, where do you draw the trust boundary?

Let’s think it through.

Who Do You Trust?

Anyone can mint an NFT of a Bored Ape JPG, but it’s not a real Bored Ape NFT unless it was minted by the BoredApeYachtClub, from contract address 0xaba7161a7fb69c88e16ed9f455ce62b791ee4d03. The Bored Ape site won’t list it, and OpenSea won’t show it under the Bored Ape contract.

Let’s put this back in the context of some games: Game A and Game B. You have the following system — two games, both storing items as NFTs on a blockchain. We’ve already established that these NFTs can only be item codes, and will not contain assets.

Game A has the items SWORD and BIG_HAT. Game B has the items SHIELD and BIGGER_HAT. They both agree to implement each other’s items.

Where do you draw the trust boundary?

Game A supports SWORD, SHIELD, BIG_HAT, and BIGGER_HAT.

Game B supports SWORD, SHIELD, BIG_HAT, and BIGGER_HAT.

But clearly this is insufficient. What’s important is not just the item, but the contract/game that minted it. If Game B plans to use an item from Game A, they can only trust that item if it was minted by Game A.

There’s an obvious reason why.

Fake Mints

If Game A decides that all items are equally trustworthy, regardless of which contract minted them, anyone can add anything to their inventory. Game A has opened the door to fake items, or worse. Let’s see that vulnerability in action.

Player X mints an NFT with an item BIG_HAT and adds it to their wallet.

Game A obviously should not trust this item, even though it supports BIG_HAT. That’s because it’s not BIG_HAT that is trustworthy; it’s BIG_HAT minted by 0xA, Game A’s contract.
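Here is a minimal Python sketch of that difference, assuming a token can be reduced to a (minting contract, item code) pair. `Nft`, `naive_accepts`, `qualified_accepts` and the address `0xX` are hypothetical names for illustration. The naive check only asks whether the item code is supported, so Player X's self-minted BIG_HAT passes it.

```python
from typing import NamedTuple


class Nft(NamedTuple):
    contract: str   # address of the contract that minted the token
    item_code: str  # the item code stored in the token


SUPPORTED_CODES = {"SWORD", "SHIELD", "BIG_HAT", "BIGGER_HAT"}
TRUSTED_MINTERS = {"0xA", "0xB"}  # hypothetical addresses for Game A and Game B


def naive_accepts(nft: Nft) -> bool:
    # Only asks "do we support this item code?"
    return nft.item_code in SUPPORTED_CODES


def qualified_accepts(nft: Nft) -> bool:
    # Also asks "did a contract we trust mint it?"
    # Note: checking minter and code separately still can't tell
    # Game A's BIG_HAT from a Game B item that reuses the same code.
    return nft.contract in TRUSTED_MINTERS and nft.item_code in SUPPORTED_CODES


fake = Nft(contract="0xX", item_code="BIG_HAT")  # Player X's self-minted hat
real = Nft(contract="0xA", item_code="BIG_HAT")

print(naive_accepts(fake), qualified_accepts(fake))  # True False
print(naive_accepts(real), qualified_accepts(real))  # True True
```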

The Actual Vulnerability Is Name Collision

That’s a malicious example, but the same thing can occur completely naturally.

What happens when Game B — a trusted contract — adds an item that also uses the code BIG_HAT?

Game A supports SWORD, SHIELD, BIG_HAT, and BIGGER_HAT.

Game B supports SWORD, SHIELD, BIG_HAT, and BIGGER_HAT.

Player X has BIG_HAT. But which BIG_HAT is it? In order to avoid confusion, each game needs to maintain a list that includes information about both the items it trusts and the game/contract that minted them.

Game A and Game B now have a list that looks like this:

0xA SWORD

0xA BIG_HAT

0xB BIG_HAT

0xB SHIELD

0xB BIGGER_HAT
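The list above is, in effect, a lookup table keyed on the pair (minting contract, item code) rather than on the item code alone. A minimal Python sketch of the difference follows; the local item names such as `game_a_big_hat` and the helper `resolve` are made up for illustration.

```python
from typing import Dict, Optional, Tuple

# Keyed by item code alone, Game B's BIG_HAT silently replaces Game A's.
by_code_only: Dict[str, str] = {}
by_code_only["BIG_HAT"] = "game_a_big_hat"
by_code_only["BIG_HAT"] = "game_b_big_hat"

# Keyed by (minting contract, item code), both hats coexist.
TRUST_LIST: Dict[Tuple[str, str], str] = {
    ("0xA", "SWORD"): "game_a_sword",
    ("0xA", "BIG_HAT"): "game_a_big_hat",
    ("0xB", "BIG_HAT"): "game_b_big_hat",
    ("0xB", "SHIELD"): "game_b_shield",
    ("0xB", "BIGGER_HAT"): "game_b_bigger_hat",
}


def resolve(contract: str, item_code: str) -> Optional[str]:
    """Return the local item for a token, or None if the pair isn't on the list."""
    return TRUST_LIST.get((contract, item_code))


print(by_code_only)  # {'BIG_HAT': 'game_b_big_hat'}
print(resolve("0xA", "BIG_HAT"), resolve("0xB", "BIG_HAT"))  # game_a_big_hat game_b_big_hat
print(resolve("0xX", "BIG_HAT"))  # None
```

Every participating game has to hold its own copy of a table like this and keep it in sync with every other game.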

“But Christina”, you ask, “you talked about using UUIDs to generate unique ids. Couldn’t you just use those, and then each game could store a mapping of UUIDs to in-game items?”

Good question! But even if you avoid an accidental collision this way, it doesn’t protect you from someone maliciously and intentionally minting an item with a “unique” string. And because NFT contents are public, anyone can inspect or clone them.
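A short sketch of why a UUID alone doesn't help, assuming a hypothetical game that maps UUID item codes to in-game items (`ITEMS_BY_UUID`, `uuid_only_lookup` and `minter_checked_lookup` are illustrative names). Because the UUID is readable by anyone, a clone minted by another contract carries exactly the same string; only checking who minted it tells the two apart.

```python
import uuid
from typing import FrozenSet, Optional, Tuple

Token = Tuple[str, str]  # (minting contract, item code); a hypothetical shape

hat_code = str(uuid.uuid4())  # a genuinely unique code, generated by Game A
ITEMS_BY_UUID = {hat_code: "game_a_big_hat"}

genuine: Token = ("0xA", hat_code)
clone: Token = ("0xX", hat_code)  # same public "unique" string, different minter


def uuid_only_lookup(token: Token) -> Optional[str]:
    _contract, code = token
    return ITEMS_BY_UUID.get(code)


def minter_checked_lookup(
    token: Token, trusted: FrozenSet[str] = frozenset({"0xA"})
) -> Optional[str]:
    contract, code = token
    return ITEMS_BY_UUID.get(code) if contract in trusted else None


print(uuid_only_lookup(genuine), uuid_only_lookup(clone))  # game_a_big_hat game_a_big_hat
print(minter_checked_lookup(genuine), minter_checked_lookup(clone))  # game_a_big_hat None
```

The UUID removes accidental collisions, but the decision that actually protects the game is still "do we trust the minter?"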

This isn’t a technological problem — it’s a logical problem that arises from using shared storage. You can’t trust everything on the blockchain, you can only trust things on the blockchain that come from trusted sources.

This logic error underpins every failure of blockchain to deliver the promised metaverse of interoperable items. There is no way around it, because untrusted shared storage is a bad plan. The “trust” that blockchain delivers does not ensure that the data is trustworthy. It only ensures that we have a record of what was posted, and by whom. Whether or not we trust that data is entirely up to us.

That is the Trust Mesh Problem. And it cannot be solved. It can only be managed through choices that we, as game operators, make about who we trust.

So Who Do We Trust?

We already discussed that Game A’s developers need to do a lot of work to implement Game B’s items by hand, and that they'd need to do this all over again for every game they use items from.

So naturally, we trust those items from those sources.

Earlier, I asked you where you thought the trust boundary was. What was your answer?

Here’s what it actually is. As Game A, we don’t trust the blockchain. We don’t even trust the contents of our players’ wallets. We trust our servers, we trust items that we minted ourselves, and we trust items that were minted by specific contracts that we choose.

This reveals the real truth: since what we actually trust is Game B’s contract, by extension we also trust Game B. We trust the contract code, the server code that calls the contract, and anything that they create or execute.

A game’s Trust Mesh is the network of external systems that it decides are a source of truth. Here’s our trust mesh in the Game A/Game B scenario.

The Trust Mesh

So we (Game A) trust Game B. But by trusting their inventory, we are also trusting their drop rates, their economy, their methodologies — everything that goes into the game that we choose to support.

This means that Game B can fuck us in many different ways. Not only can they fuck our game balance (by flooding our game with super-powerful items, for instance), but they can fuck us economically. If Game A chooses to support Game B's skins, and those skins are cheaper than Game A’s skins, that could decimate Game A's skin revenue. And Game A can't even sell Game B's skins to make up for it, because they can’t mint them. As we already established, Game A and any other game can only trust Game B items that came from Game B's contract.

And there’s nothing you can do about it, because it’s a logic problem. If you allow Game A to mint and sell Game B’s items, every game participating in this item-sharing system now has to maintain a list of all valid sources for Game B items, or else Game A’s Game B items will be incorrectly identified as fakes. It also means that any time a new game joins the sharing system, every participant has to update that list in their game. A single incremental change then requires work from every participant, so the total effort grows with the square of the number of games, which destroys the idea of this system scaling. And Game B just gave Game A another way to fuck them. Before, Game B could only make skins that might be more popular than Game A’s. Now, Game A would be able to literally sell Game B’s content, but cheaper.
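A rough Python sketch of the bookkeeping this would require, assuming each game keeps its own registry mapping an item's owner to the contracts allowed to mint that owner's items (`registries`, `is_genuine` and `grant_mint_rights` are hypothetical names). If Game B grants Game A mint rights but one participant's registry isn't updated, that participant rejects Game A's legitimate Game B items.

```python
from collections import defaultdict
from typing import List

# Each game keeps its own copy of: item owner -> contracts allowed to mint it.
games = ["A", "B", "C"]
registries = {g: defaultdict(set) for g in games}
for g in games:
    for owner in games:
        registries[g][owner].add(f"0x{owner}")  # each game's contract mints its own items


def is_genuine(viewer: str, owner: str, minter: str) -> bool:
    # Does the viewing game accept this minter for this owner's items?
    return minter in registries[viewer][owner]


def grant_mint_rights(owner: str, new_minter: str, participants: List[str]) -> int:
    """Add new_minter as a valid source for owner's items; return registries touched."""
    for g in participants:
        registries[g][owner].add(new_minter)
    return len(participants)


# Game B lets Game A sell Game B items, but only Games A and B update their lists.
grant_mint_rights("B", "0xA", ["A", "B"])
print(is_genuine("C", "B", "0xA"))  # False: Game C flags a legitimate item as fake

# Fixing it means every participant has to make the same change.
print(grant_mint_rights("B", "0xA", games))  # 3
print(is_genuine("C", "B", "0xA"))  # True
```

Each grant touches every registry, and each new game adds both a registry and a set of items to track, which is where the quadratic growth comes from.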

This scenario also reintroduces name collisions, because now the combination of contract ID and item code is not enough to uniquely identify the correct item type. We’re back to requiring globally-unique item codes.

These issues decimate the metaverse promise, because every time you add a new game to the trust mesh, the whole thing gets more complex, and the total complexity grows quadratically with the number of games. In computer science, we write this as O(n^2), in what’s called “Big-O” notation. What does this actually look like if we try to do it for seven games instead of two?

The size of a full trust mesh is the number of participants (n), minus 1 (you already trust yourself), squared: (n-1)^2. With 10 games involved, that’s 81 trust relationships. With 100, it’s 9801, all of which must be maintained and kept in sync. And if any participant in that system retains the freedom to choose who it trusts, the number of hypothetical trust states becomes 2^n, rising from 9801 to 1,267,650,600,228,229,401,496,703,205,376.

Good luck programming for those.
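The numbers above follow directly from the article's two formulas, (n-1)^2 for the size of a full mesh and 2^n for the possible trust states. A quick Python check (the function names are hypothetical) reproduces them:

```python
def full_mesh_size(n: int) -> int:
    # The article's formula: (n - 1) squared, for n participating games.
    return (n - 1) ** 2


def trust_states(n: int) -> int:
    # The article's formula: 2 ** n, one trust/don't-trust choice per participant.
    return 2 ** n


for n in (2, 7, 10, 100):
    print(n, full_mesh_size(n), trust_states(n))
```

For 100 games this prints 9801 relationships and 1267650600228229401496703205376 trust states, matching the figures above; Python's arbitrary-precision integers handle the second number exactly.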

What About User Generated Content?

We’ve talked about the difficulties of assets before. For low-fidelity, objectiveless games like VRChat and Second Life, however, there are standards you can use to make and import assets, at the cost of performance, fidelity, and freedom. These standards are unsuitable for modern AAA games, but let’s assume that we don’t care how badly our game looks or runs.

glTF and other similar standards allow importing and using assets, but in order to allow UGC, we need to choose to trust anyone on the blockchain who claims to have an asset for our game. We’ve already discussed why that’s terrible; the problems start all over again, but this time, it’s the content that’s the issue.

Aside from the usual “Time-To-Penis” concerns (the amount of time between enabling UGC and someone using it to draw a dick) and people making things that are just bad (e.g., obscuring hitboxes or breaking silhouetting rules), we have a much larger problem: Shaders.

You might think that a surface in a game is just a texture, but it can actually be much more than that. Shaders are programs that generate the look of a surface. Textures are little more than simple images, but with a shader, you can do things like control how it refracts light, make something glow, or even simulate the liquid contents of bottles in Half-Life: Alyx. And now we’re saying that we’ll let people import shaders and execute them.

If you’re a programmer, you may commence panicking now.

Shaders are basically arbitrary code. In security terms, running untrusted shaders is a remote code execution (RCE) vulnerability: one of the worst kinds of vulnerability you can possibly have, if not the worst. You’re letting someone else run programs inside your game, on your players’ PCs. If your game loads a shader that you didn’t write, it could easily contain code to download child porn, or join a botnet and turn every player’s PC into a DDoS participant, or run an aimbot (it would be so profitable to make skins that contain aimbots in their shaders, I shudder even thinking about it). One guy even wrote a shader that runs an entire Linux instance.

You can never, ever let a client run a shader you don’t trust.

Here’s what our trust mesh looks like with UGC:

If we let users load anything at run time, we are choosing to implicitly trust them. And since we have decided that we are trusting things we don’t control, created by anyone, we arrive back at the core problem:

Trusting shared storage allows anyone to hack your game.

Quality control is gone. Anti-cheat is gone. Game balance is destroyed. The game economy is destroyed. It is all the hell of trying to buy an authentic item on Amazon, times a million.

The Cursed Design Problem

This is what we call a Cursed Design Problem: when you have two goals that are in conflict with each other, and you can’t have both.

Example:

You want to buy a two-seater sports car.

You also want to be able to transport four people.

You can’t have a two-seater car and transport four people. One goal must lose.

The logic error inherent to NFTs in games is a cursed design problem.

If you have user-generated, NFT-loaded skins, you can’t have security, stability, or performance.

If you have items coming in from other games, you can’t have economic integrity.

If you have immutability, you can’t have customer support fix things when you get hacked.

These are not technical problems — they’re logical problems. The design goals are in conflict with each other. They cannot be resolved without sacrificing one of them.

You cannot fix it with more code. A new ERC can’t fix it. A new token can’t fix it. The plan is at odds with itself, because it was never designed for this. It is the wrong tool for the wrong job.

—

This is the end. Hopefully you’ve come to understand why we don’t do this; it doesn’t do what you think it does, we can already achieve the same things using stuff that actually works, the outcome is worse, and the plan does not make sense.

I hope that by writing this, I will never have to explain this to anyone again. That won’t happen, but a girl can hope 🤞

for those we have lost; for those we can yet save