How Sound Works In Games

A game audio primer with Wwise and UE5.

Ponder for a second what sound is: thousands of vibrations in the air, traveling at roughly 343m/s at room temperature, bouncing and diffracting off objects and their surfaces, all of which vibrate, absorb, and reflect in different ways, and at frequencies up to 20,000 times per second.

There’s a reason that accurately simulating something like aerodynamics requires supercomputers — reality is complicated. There are so many variables and processes that need to run, and they need to run at such small intervals they can’t run in real time.

Games also need to run simulations, but unlike the simulations that keep our planes in the air, they must run in real time. In order for that to be achievable, we need to optimize reality and make systems that instead simulate a simplified approximation of it.

In order to hold 60fps, each frame must complete in about 16.7ms. That’s not a lot of time. We can simulate semi-realistic physics with large objects in that timeframe, and hand a lot of it off to the GPU, but simulating the actual physical reality of sound in a game is impossible to do on desktop hardware in a matter of milliseconds. So instead of simulating it physically, we approximate it.
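The frame budget is plain arithmetic: one second divided by the target frame rate. Here is a minimal sketch (the function name is only for illustration) showing that 60fps leaves roughly 16.67ms per frame for everything the game does, audio included:

```python
def frame_budget_ms(fps: float) -> float:
    """Return the time available for one frame, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


# Compare the budget at a few common frame rates.
for fps in (30, 60, 120):
    print(f"{fps} fps -> {frame_budget_ms(fps):.2f} ms per frame")
```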

The Absolute Basics

This primer will use terminology and functions from Unreal Engine 5 and the Wwise sound engine from Audiokinetic.

So how do we simulate sounds?

We start with two basic Actor Components from Wwise:

Emitters, which tell the game world a sound is being played (AkComponent)

Listeners, which receive the sounds being played (AkAudioListener)

Unreal note: Components attach to an object in the game (their parent) and are used to add similar functionalities to different kinds of Actors. For example: You could create a “health” Component that includes functionality for displaying a health bar, or for destroying Actors when their health reaches zero. You could then attach that to any Actor that you want to be able to take damage.

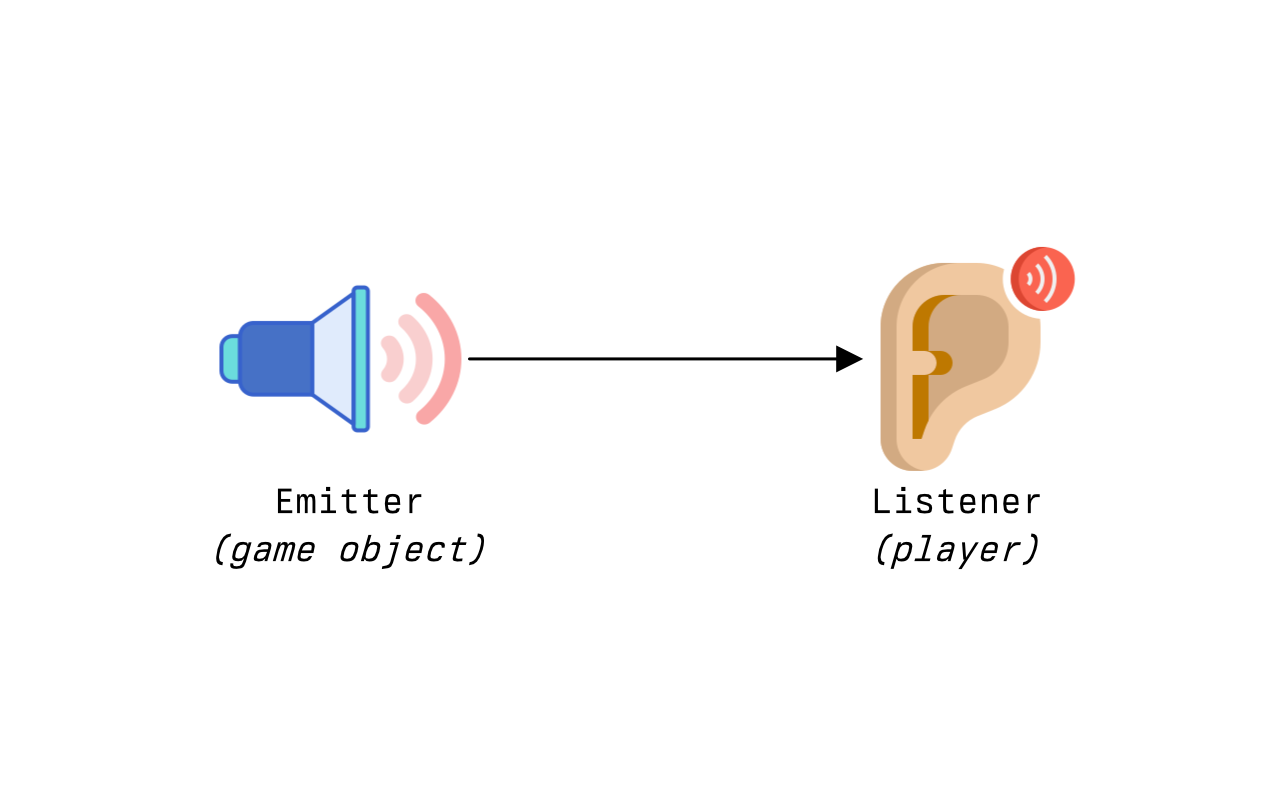

In a very basic setup, you’d have a Listener component on the player character (or camera), and Emitter components on anything that creates a sound. The Emitter components get fed sound files, and the Listener component gets hooked up to the output audio device, so when an Emitter signals that it is playing a sound, the Listener receives that signal and plays the sound on your computer.

But if we stop here, all sounds in the game will be heard at the same maximum volume — as if you’d just hit play on the sound file.

Unreal note: Location information is stored as a Vector 3 (vec3), which is three float values packed into one data structure. A vec3 suits location information because it holds exactly one value each for an object’s x, y, and z. A vec3 can also be used for rotation information, as the same three floats can represent pitch, yaw, and roll. What’s important to remember is that it is just a container that stores three numbers; it’s up to us to decide what those numbers represent.

Attenuation

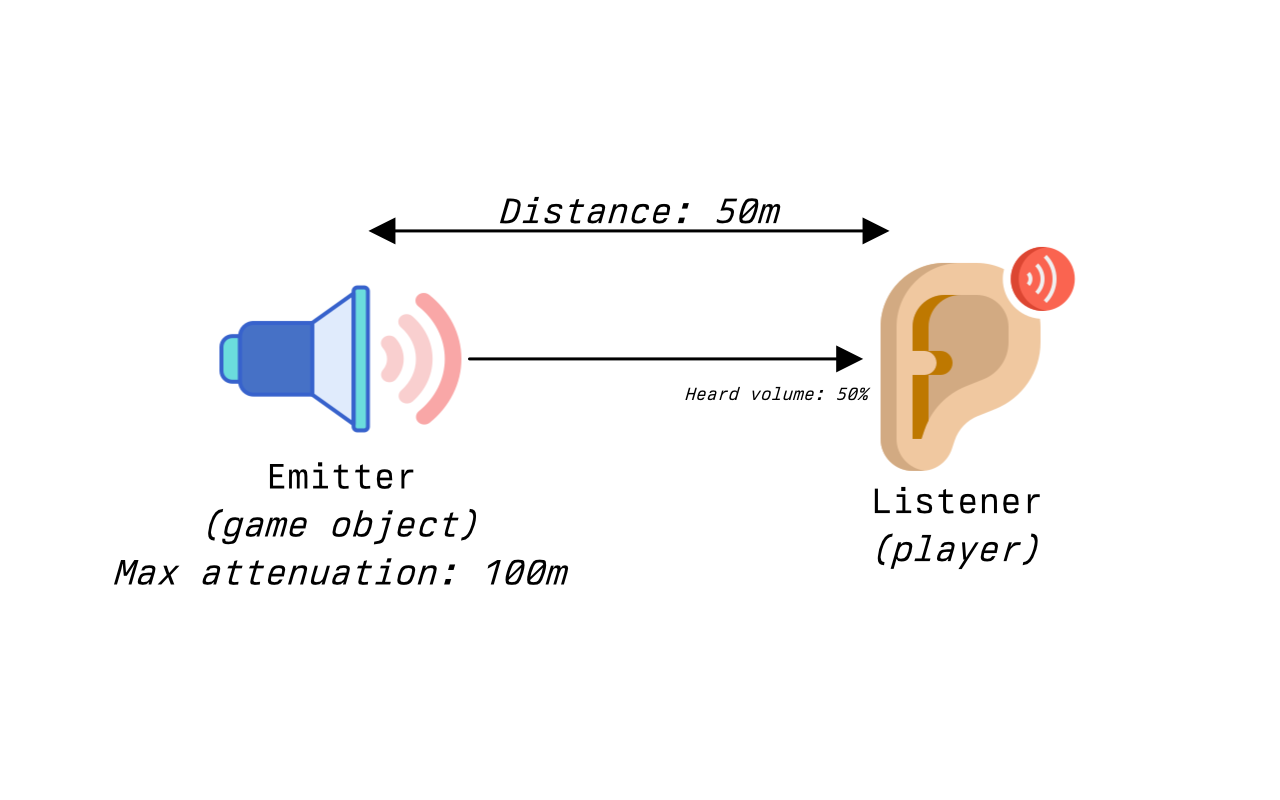

In order to simulate the fact that sounds that happen further away appear quieter to the listener, the game engine feeds Location information for both the Emitter and the Listener into the sound engine. Knowing these two locations lets it figure out how far away they are from one another.

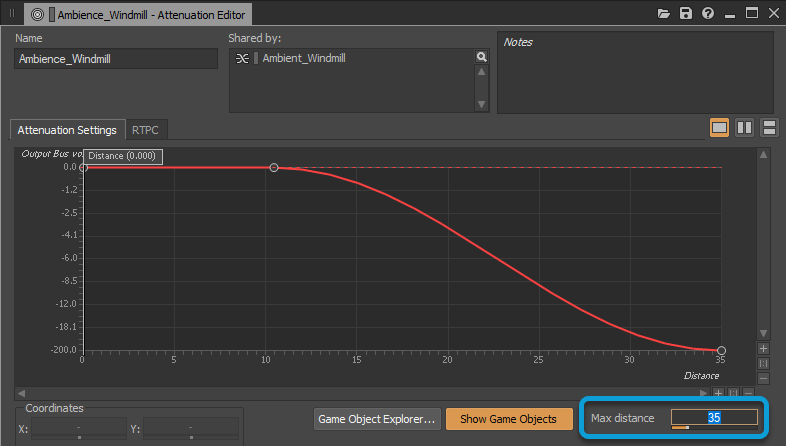

Sounds are physical waves in the air, and those waves decay as they travel. Since we’re not actually simulating the waves in the air, we need a method to approximate how much they tail off between the two locations. The amount that a sound decays between two points is called attenuation, which is basically ‘how much does the volume decrease’. To calculate the decrease, each sound has an attenuation curve, which is a 2D graph mapping Distance to Attenuation. Wwise gets the distance between the Listener and the Emitter, then looks up the attenuation curve — for distance x, it uses the value y to determine how much quieter to make the sound.
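To make the lookup concrete, here is a minimal sketch of a distance-to-attenuation curve. The curve points, the units (metres and decibels), and the straight-line interpolation are all assumptions for illustration. Wwise lets you shape curve segments in other ways, so treat this as the idea rather than its implementation.

```python
import math
from bisect import bisect_right

# Hypothetical curve: (distance in metres, volume change in dB), sorted by distance.
CURVE = [(0.0, 0.0), (10.0, -6.0), (30.0, -24.0), (50.0, -96.0)]


def attenuation_db(distance, curve=CURVE):
    """Linearly interpolate the curve at `distance`, clamping at both ends."""
    xs = [x for x, _ in curve]
    if distance <= xs[0]:
        return curve[0][1]
    if distance >= xs[-1]:
        return curve[-1][1]
    i = bisect_right(xs, distance)          # index of the first point beyond `distance`
    (x0, y0), (x1, y1) = curve[i - 1], curve[i]
    t = (distance - x0) / (x1 - x0)         # 0..1 position between the two points
    return y0 + t * (y1 - y0)


def db_to_gain(db):
    """Convert a decibel change into a linear amplitude multiplier."""
    return 10.0 ** (db / 20.0)


emitter = (12.0, 16.0, 0.0)
listener = (0.0, 0.0, 0.0)
distance = math.dist(emitter, listener)     # straight-line distance between the two
print(distance, attenuation_db(distance), db_to_gain(attenuation_db(distance)))
```

The only inputs are two locations and a curve; no waveform is touched at this stage.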

The important thing to grasp is that a Listener isn’t receiving an audio stream of the actual sound from an Emitter the way a microphone does. It’s simply being told that the Emitter sound should play to this Listener at a certain volume level, which is dependent on distance.

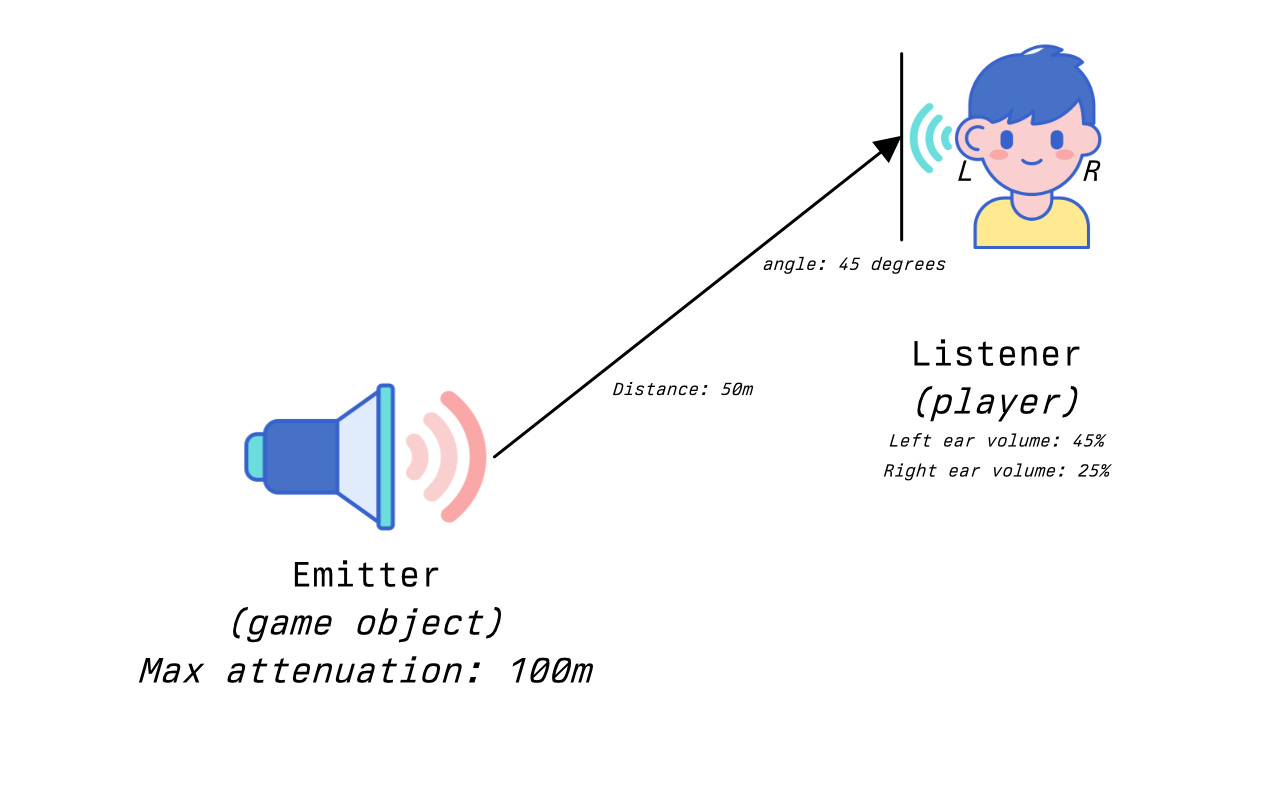

(All numbers in the diagrams that follow are made up for illustration purposes.)

But there’s one more value to calculate to finish the basics.

Balance

If we only use location information, we will hear the sound in both ears at the same volume. But a sound playing to our right should be heard more loudly in the right ear than the left, so we also need Rotation information to calculate how much sound should be played in each ear. By taking the rotation of the camera in 3D space and calculating the “look at rotation” (how much we’d need to turn to be facing the sound directly), the sound engine can calculate how to balance the sound between the Left and Right audio channels. This won’t necessarily drop a channel to zero either, as sounds usually make it to both ears; they’re just louder in the ear they’re closest to.
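One common way to turn that angle into left/right levels is constant-power panning. The sketch below is an assumption about how such a calculation could look, not how Wwise does it internally. It works in 2D, assumes the listener faces +x at yaw 0 with +y to their right, and uses a made-up `max_pan` parameter to keep some signal in the far ear.

```python
import math


def pan_gains(listener_pos, listener_yaw_deg, emitter_pos, max_pan=0.8):
    """Return (left_gain, right_gain) for an emitter, using constant-power panning.

    Assumed convention: at yaw 0 the listener faces +x, and +y is to their right.
    `max_pan` below 1.0 keeps the far ear from dropping to silence.
    """
    dx = emitter_pos[0] - listener_pos[0]
    dy = emitter_pos[1] - listener_pos[1]
    # Angle to the emitter relative to the direction the listener is facing.
    bearing = math.atan2(dy, dx) - math.radians(listener_yaw_deg)
    # -max_pan (left) .. +max_pan (right); sin() treats front and rear alike.
    pan = max_pan * math.sin(bearing)
    theta = (pan + 1.0) * math.pi / 4.0
    return math.cos(theta), math.sin(theta)


left, right = pan_gains((0.0, 0.0), 0.0, (0.0, 5.0))   # emitter directly to the right
print(left, right)
```

Turning the listener to face the emitter brings the two gains back to equal, which is the “look at rotation” idea in miniature.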

Wwise note: The raw sound file for playback needs metadata to store the attenuation curve, balance, 3D configuration, and other information needed to calculate all this. In Wwise, this object is predictably called a Sound. You can group sounds together under an Actor-Mixer in the Actor-Mixer hierarchy to apply the same rules to all of them, or selectively override the parent’s settings for individual sounds.

You do not have to use either of these settings. You can make any sound play at the same volume or balance regardless of distance or direction (e.g. helmet radio comms) by disabling attenuation and 3D position/balance.

Remember, this is all just calculation. The sum total of playing sounds is only mixed down to an actual output waveform when it’s finally played by a Listener.

So that’s the basics of a simple 3D sound setup: the locations become attenuation, and the rotation becomes balance. Put them together, and your player can hear sounds relative to their own position and facing. It’s a great start! But it’s still only the beginning. If you want to make your sound immersive, you need to start simulating how sound travels through the environment.

The Environment

If you just recoiled in horror thinking about how to simulate sound in a complex physical environment without actually simulating the sound waves… good. It is very, very complicated. We need to:

1. Mimic the behaviors of the real world while:

a. Making sure the right sounds can be heard, and;

b. Processing sounds based on the game world to ensure realism.

2. Optimize away anything we can cull, simplify, or eliminate, because step 1 can be CPU and RAM intensive.

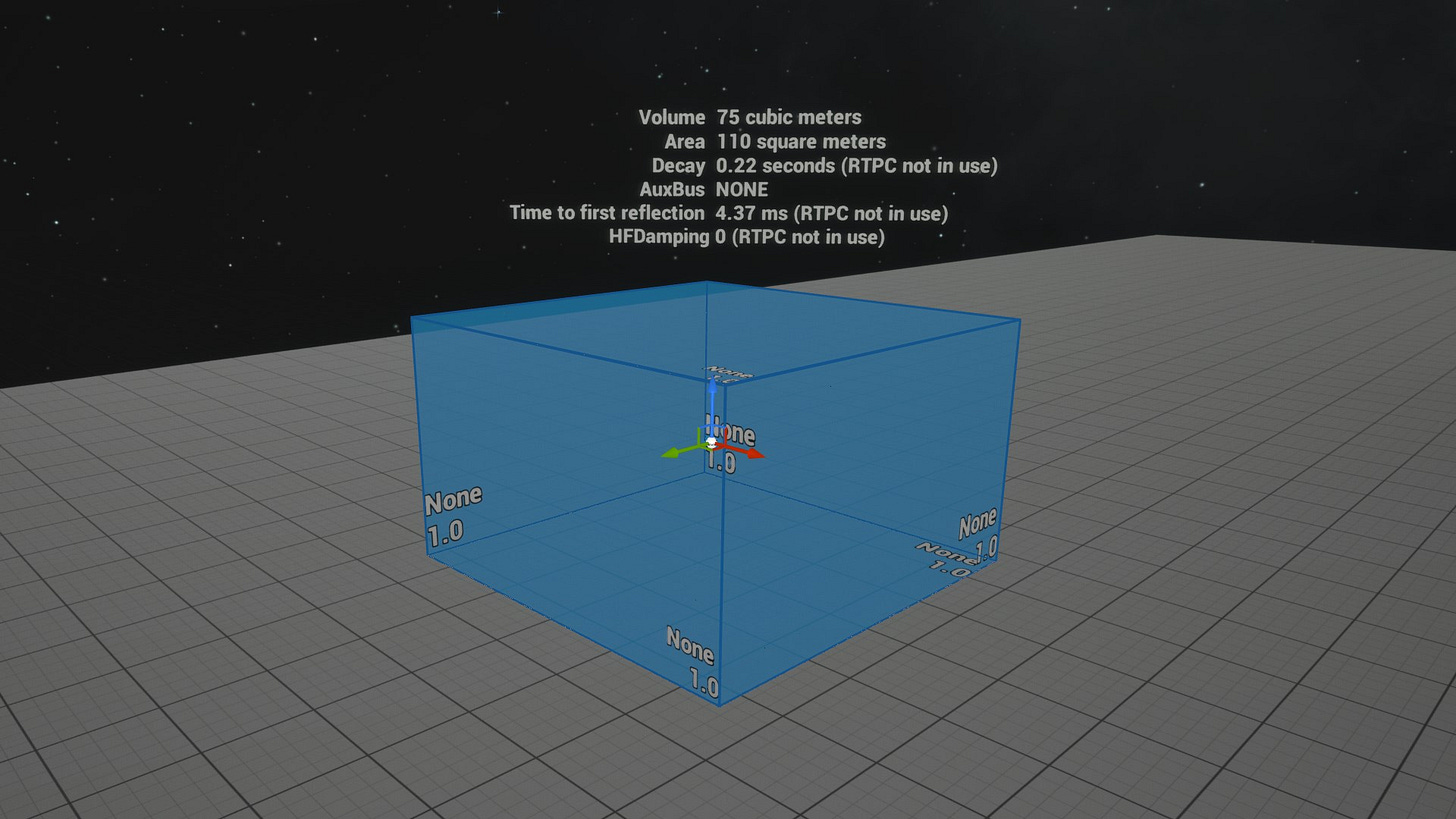

Wwise provides a number of tools for this, and groups some of them together using Volumes in a building block called a Spatial Audio Volume (AkSpatialAudioVolume).

Unreal note: A Volume in Unreal is an object that represents a box (or other shape) in 3D space, inside of certain coordinates. Their main job is to understand when things are inside them, and then take specific actions depending on what’s inside or outside, or entering or exiting the space. A simple example is a Trigger Volume, which fires an event to make something happen when you walk inside of it.

Spatial Audio Volumes and Acoustic Portals

A Spatial Audio Volume is a Volume with a number of specialized components on it that feed Wwise the information it needs for its calculations. Spatial Audio Volumes are used to divide the world up into smaller chunks, to define both how sound behaves within them and how it travels between them. Without them, the engine would have to evaluate every sound in the game world individually to see if you can hear it.

A Spatial Audio Volume provides three functions, which can all be provided separately if their components are attached to any Volume:

A Surface Reflector Set component, to reflect and transmit sound at the edges.

A Room component, to contain and group sounds together.

A Late Reverb component, to calculate and route reverb to other buses.

Surface Reflector Sets

Wwise keeps its own internal 3D geometry of the shape of your levels to track how sound should travel through and bounce off objects and the environment.

A Surface Reflector pushes an Actor’s geometry into Wwise so it can make calculations about what should happen when sound hits it.

Surface Reflector Sets in a Spatial Audio Volume automatically construct reflectors around the boundaries of the Volume so you can configure the behavior of the room as a unit. This allows you to adjust things like:

How much sound “leaks” through the edges of the room (Transmission Loss)

How sound bounces off the walls and is absorbed, reflected, etc. (Acoustic Materials)

Example: A room in your game that’s an airlock might have its Transmission Loss set to 1 (no transmission through walls) and have Materials that mostly absorb sound. A tiled bathroom might have moderate transmission loss because of its wall thickness, but its Materials (primarily tile) would reflect back most frequencies.

Unreal tip: If you want to make regular in-game objects participate in audio calculations (e.g. you want sound to be quieter when a player is behind cover), you can add an AkGeometry component to any other Actor.

At its core, a Surface Reflector Set is pure 3D geometry and nothing more. It defines audio boundaries in the game world and what happens when sounds interact with them. We don’t (usually) want to hear sounds from the entire game world. But in order to cull those world sounds so they’re not “playing” unnecessarily and consuming CPU, we need to figure out what we should hear in a given space. We also don’t want to be processing every sound in the game world separately, so we need an organizational concept that lets us group them together for more efficient culling or activation.

This is why we have Rooms and Portals.

Rooms And Portals

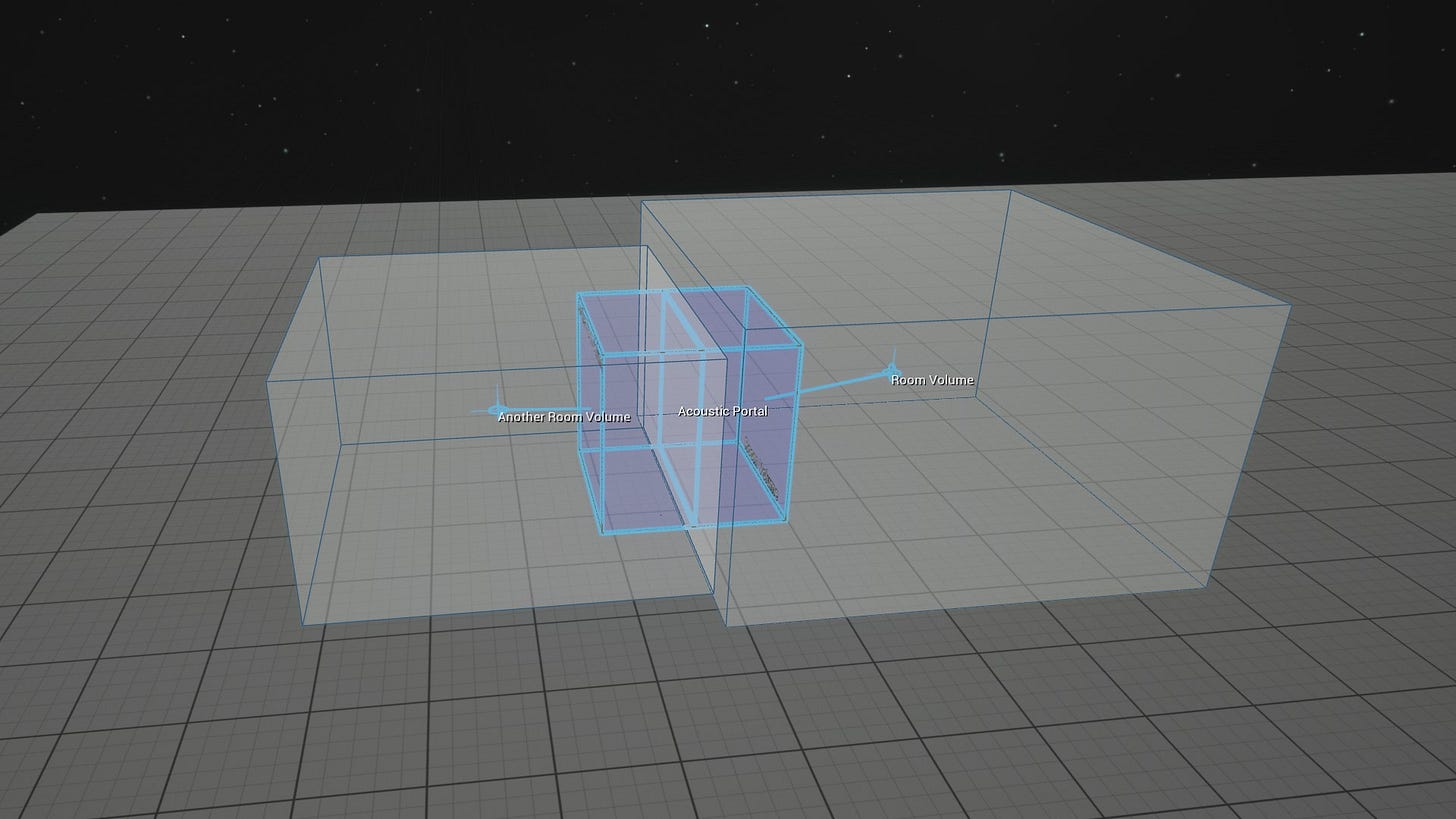

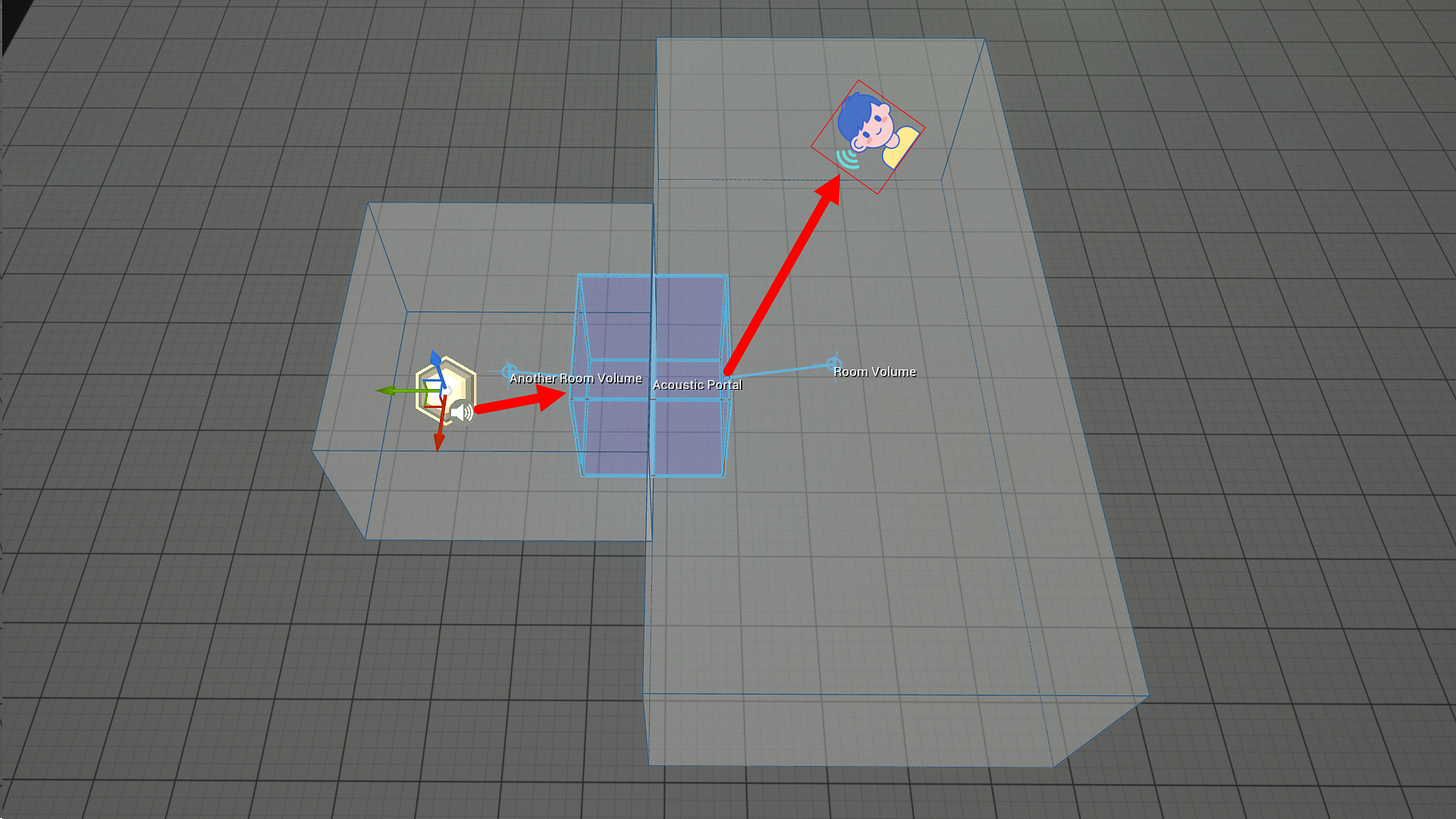

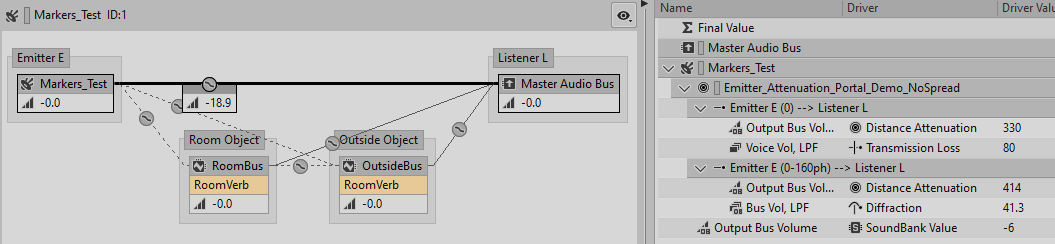

Internally, Wwise keeps an adjacency map of which Spatial Audio Volumes are connected to each other using Rooms and Portals. You can think of rooms and portals as links in a chain - a “propagation path” which will be walked and added up to calculate the total path between Emitter and Listener, so the Listener knows what the volume, balance, reverb, and other effects should be when the sound finally plays to the mix.

Rooms contain sounds, and they transmit sounds within them to other rooms via Portals.
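The adjacency map can be pictured as a small graph where Rooms are nodes and Portals are the edges between them. The sketch below is a guess at the shape of the idea rather than Wwise’s internal data structure. The room and portal names are invented, and a breadth-first search stands in for whatever path-finding the engine really uses.

```python
from collections import deque

# Hypothetical level: each portal joins exactly two rooms.
PORTALS = {
    "p1": ("r1", "r2"),
    "p2": ("r2", "r3"),
}


def build_adjacency(portals):
    """Map each room to a list of (portal, neighbouring room) pairs."""
    adjacency = {}
    for portal, (room_a, room_b) in portals.items():
        adjacency.setdefault(room_a, []).append((portal, room_b))
        adjacency.setdefault(room_b, []).append((portal, room_a))
    return adjacency


def propagation_path(adjacency, emitter_room, listener_room):
    """Breadth-first search; returns alternating rooms and portals, or None."""
    queue = deque([[emitter_room]])
    visited = {emitter_room}
    while queue:
        path = queue.popleft()
        room = path[-1]
        if room == listener_room:
            return path
        for portal, neighbour in adjacency.get(room, []):
            if neighbour not in visited:
                visited.add(neighbour)
                queue.append(path + [portal, neighbour])
    return None  # no chain of portals connects the two rooms


adjacency = build_adjacency(PORTALS)
print(propagation_path(adjacency, "r1", "r3"))
```

Each link in the returned chain is a place where distance, openness, and other properties can be added up.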

Rooms

A Spatial Audio Volume “becomes” a room because it is a Volume that has a Room Component. A Portal is an entirely separate Actor (AkAcousticPortal) with an AkPortalComponent. You can create your own Room or Portal actors by adding an AkRoomComponent or AkPortalComponent to any Volume.

Wwise uses the Room Component (AkRoomComponent) to provide a few core behaviors:

Transmitting the sounds that occur inside it to other Rooms via Portals (or in the absence of a Surface Reflector Set, through its boundaries).

Defining oriented reverb within a space. (more on that later)

Portals

A Portal acts as a bridge between two rooms, and alters the values to be applied to a sound for the next room. In this example, the Portal lets the sound in Room 1 (left, r1) be heard in Room 2 (right, r2). The sound will appear in Room 2 (r2) from the location of the Portal.

The process of a sound traveling between Rooms goes something like this:

An Emitter plays a sound within Room 1 (r1).

The distance (and other acoustic properties) between the Emitter and the Portal (p1) is calculated.

If the sound would be “audible” to the Portal (p1) based on how far it’s allowed to travel, the Portal “hears” the sound.

The Portal (p1) adjusts the sound’s location so that it appears to come from the Portal’s own location as it enters Room 2 (r2) with its new values and, optionally, attenuates it further if the Portal is partially closed.

The Listener on the player receives the sound, calculates the total attenuation and balance from the chain, and the player hears the sound as coming from the Portal (p1) in the second Room (r2).
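The steps above can be sketched as a single function. Everything here is an illustrative assumption: the function name, the `openness` parameter, and the linear falloff that stands in for a real attenuation curve. What matters is the shape of the calculation: measure Emitter to Portal, decide whether the Portal “hears” it, then hand the Listener a position and a level.

```python
import math


def hear_through_portal(emitter, portal, listener, max_distance, openness=1.0):
    """Return (apparent_position, gain), or None if the sound is culled.

    `openness` runs from 0.0 (closed) to 1.0 (fully open). The linear falloff
    is a placeholder for a real attenuation curve.
    """
    to_portal = math.dist(emitter, portal)
    if to_portal > max_distance or openness <= 0.0:
        return None                       # the Portal never "hears" the sound
    total = to_portal + math.dist(portal, listener)
    if total >= max_distance:
        return None                       # too far by the time it reaches the Listener
    gain = (1.0 - total / max_distance) * openness
    return portal, gain                   # the sound now appears to come from the Portal


result = hear_through_portal(
    emitter=(0.0, 0.0, 0.0),
    portal=(10.0, 0.0, 0.0),
    listener=(10.0, 5.0, 0.0),
    max_distance=50.0,
)
print(result)
```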

Optimization

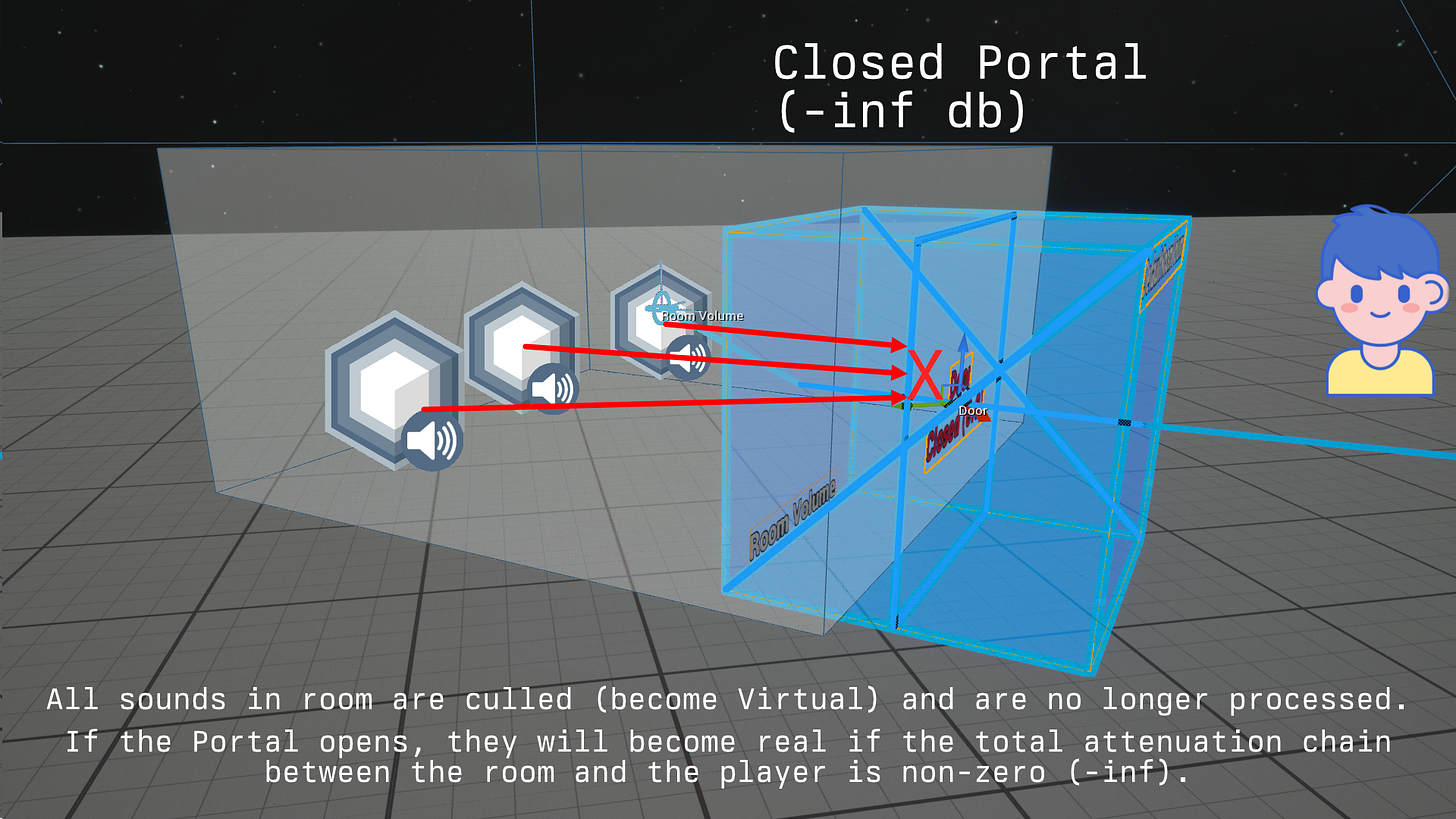

Now let’s take a look at what happens when a Portal is closed.

In the image above, the engine knows there are three sounds in the first Room (r1). It also knows sound does not leak through the walls. It knows that the Portal (p1) between Room 1 (r1) and the Room the player is in (r2) is closed, so it determines not to play those sounds. The engine is able to temporarily ignore anything in Room 1 (r1) by evaluating just the state of Room 1 (r1) (no wall transmission) and the Portal (p1) (closed).

By evaluating these choke points in our tree of connected Rooms and Portals (propagation path), we can cull entire areas of the map from audio that we know aren’t relevant — and stop any processing on them — with math alone.
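Here is a minimal sketch of that culling step, assuming (as in the example) that walls transmit nothing. The data layout and names are invented for illustration. It walks outward from the Listener’s Room through open Portals only, and anything in a Room it never reaches is skipped entirely.

```python
def audible_rooms(portals, listener_room):
    """Return the set of rooms connected to the listener through open portals.

    `portals` maps a portal name to (room_a, room_b, is_open).
    Assumes walls transmit no sound, so portals are the only way through.
    """
    reachable = {listener_room}
    frontier = [listener_room]
    while frontier:
        room = frontier.pop()
        for room_a, room_b, is_open in portals.values():
            if not is_open or room not in (room_a, room_b):
                continue                  # a closed portal is a dead end
            other = room_b if room == room_a else room_a
            if other not in reachable:
                reachable.add(other)
                frontier.append(other)
    return reachable


def sounds_to_process(sounds_by_room, portals, listener_room):
    """Keep only the sounds in rooms the listener can actually hear."""
    rooms = audible_rooms(portals, listener_room)
    return [s for room, sounds in sounds_by_room.items() if room in rooms for s in sounds]


portals = {"p1": ("r1", "r2", False), "p2": ("r2", "r3", True)}
sounds = {"r1": ["fan", "radio", "drip"], "r2": ["footsteps"], "r3": ["engine"]}
print(sounds_to_process(sounds, portals, "r2"))
```

The three sounds in r1 are dropped without any per-sound distance or attenuation work.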

Ambient Sounds

We talked about sound emitting from locations and about sound distance from Portals, but what if we don’t want sounds to play from a specific location? What if we want the quiet hum of an air conditioner to play gently anywhere in a Room? This is called “Ambient Sound” and it’s treated a special way inside Rooms.

Usually, an Emitter (AkComponent) has a specific position in 3D space that a sound plays from, and its volume and balance are calculated for the Listener relative to that location.

Ambient sounds played on a Room play at a consistent volume and balance no matter where you are in the Room. Attenuation profiles on a sound playing inside a room will not apply until that sound exits the room via a Portal. After it exits via a Portal, it behaves as in the earlier example: a Portal (p1) that “hears” an ambient sound in one room (r1) will then rebroadcast that sound with itself as the origin point. So, if you’re standing in the adjacent room (r2), you will hear the ambient sound as coming from the Portal (p1), because volume and balance for the Listener on the player/camera are calculated from the Portal’s location.

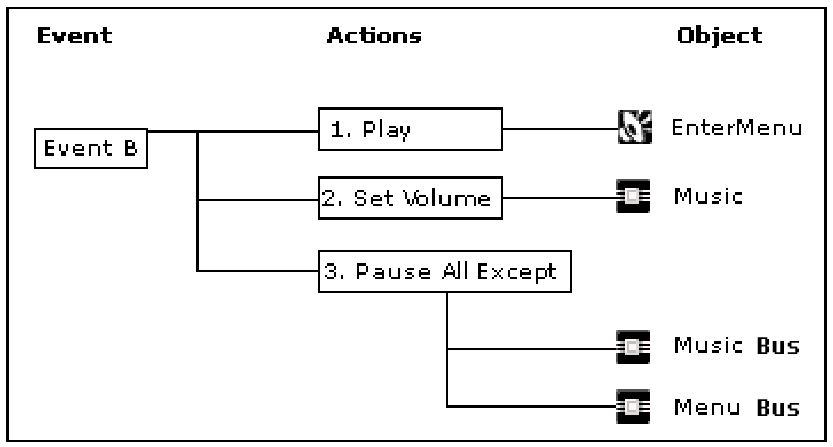

Wwise note: In Wwise, you don’t actually trigger sounds directly — you post an Event which references the sound and tells it how to play. Events can be used to start or stop specific sounds, play loops, or trigger other arbitrary actions.

If you want a sound to play ambiently in a room, there’s a field in the AkRoom for an AkEvent which triggers playing a sound. Optionally, there’s a checkbox for “auto-post” which means it will post the event when the game starts (on BeginPlay).

Unreal/Wwise note: Events must be posted to a Game Object, which is just a way of recording different things Wwise knows about in your game. If the Actor in Unreal has an AkComponent capable of playing the sound, it will use that as the Game Object source. If the Actor does not have one, one will be created dynamically for playback.

Important: If you want to play a sound ambiently later on, you need to post the event to the AkRoom component, NOT the AkSpatialAudioVolume actor. Posting it to the Actor makes it look for an AkComponent to play from, and since an AkRoom is not an AkComponent, it will create an AkComponent in the middle of the volume and play the sound from there spatially instead of ambiently. You almost certainly do not want this!

Ambient Spaces Outdoors

If you want a particular space to have an ambient sound in and around it, there’s an option in the AkRoom to make it a “Reverb Volume”, which changes the rules a little. The sound will play ambiently inside the space, but emit outwards in all directions from the room without connecting Portals to do so. This is useful for outdoor spaces where you want an area to have some particular sounds (like birds coming from non-specific trees in one area in the park), and also want that to tail off smoothly as you walk away.

Unreal note: The Wwise plugin provides an AkReverbVolume actor for things like this. It is basically the same as an AkSpatialAudioVolume but with presets optimized for reverb volumes and the surface reflector set disabled.

Other Rules About Rooms

Rooms have a priority value to allow you to nest Rooms inside of other Rooms (highest wins). For example: You might have one Room volume for the entire inside of a warehouse, with another Portal and Room inside it in the corner representing the inside of an office.

If a sound is played and it’s not inside a Room, it will fall back to the default “Outdoors” Room.
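These two rules can be sketched as a lookup: find every Room that contains a point, take the one with the highest priority, and fall back to “Outdoors” when nothing matches. The axis-aligned boxes, class name, and sizes below are assumptions for illustration; real Room volumes can be other shapes.

```python
from dataclasses import dataclass


@dataclass
class Room:
    name: str
    priority: int
    box_min: tuple   # (x, y, z) of one corner
    box_max: tuple   # (x, y, z) of the opposite corner

    def contains(self, point):
        """True if `point` lies inside this room's axis-aligned box."""
        return all(lo <= p <= hi for lo, p, hi in zip(self.box_min, point, self.box_max))


def room_for(point, rooms):
    """Return the name of the highest-priority room containing `point`."""
    candidates = [room for room in rooms if room.contains(point)]
    if not candidates:
        return "Outdoors"                 # default room when nothing matches
    return max(candidates, key=lambda room: room.priority).name


rooms = [
    Room("warehouse", priority=1, box_min=(0, 0, 0), box_max=(100, 100, 10)),
    Room("office", priority=2, box_min=(80, 80, 0), box_max=(100, 100, 5)),
]
print(room_for((90, 90, 1), rooms), room_for((10, 10, 1), rooms), room_for((500, 0, 0), rooms))
```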

Late Reverb

The final feature of a Spatial Audio Volume is Late Reverb, which is mostly beyond the scope of this article. It lets you calculate reverbs and echoes that occur within the Volume, and send the resulting sounds to a separate bus output so you can control how and when it’s heard. This is useful during the later stages of tuning how your level sounds.

The Core Lesson

The thing to really get across here is that we are defining — in software — a logical chain of objects and their properties between an Emitter and the Player. Traversing this list allows the sound engine to:

Determine if a sound can be heard, and do nothing if it can’t.

Build a list of property changes and effects to be applied to the sound when it is finally played.

The sound is never actually played unless Wwise logically determines that it can be heard by a Listener that can play it to an output. All we are ever doing is feeding the sound engine the information it needs to make those calculations and apply the appropriate changes/effects during final playback.

Unreal Audio Tips

Here’s some other stuff you might find useful as you start to get into it:

If you want to play a sound from a particular Actor, post the Play Event to the Actor.

If the Actor has a child AkComponent, it will play back from the first one found.

If there is no existing AkComponent, one will be created on the Actor’s root component.

If you want to play a sound from a particular Actor Component, post the Play Event to the Component. An AkComponent will be created if one does not exist as a child of that Component.

If you have a reference to a specific AkComponent that you want to play sound from, you can post the event directly to that AkComponent.

Destroying an actor that has a sound playing from a child AkComponent will stop any sound currently playing from it, because the Game Object Id playing back the sound no longer exists.

You don’t actually need one AkComponent for every possible place you might need to play sound from. There is nothing stopping you from simply moving one AkComponent around programmatically instead of having one at every location.

For example: If you have a large segment of electrical wire that you want to emit an electrical “buzz”, instead of placing Emitters all the way along the cable, you might have three Emitters that move along it with the player so that the whole thing appears to be buzzing.

You can also just play a sound at any arbitrary location using Post Event At Location. This plays from a temporary Wwise Game Object which is immediately destroyed, and no AkComponent is created because there is no parent actor. This is a cheap way of playing a lot of short-lived sounds with little overhead.
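The electrical wire example comes down to one piece of geometry: finding the point on the wire closest to the player and parking an Emitter there each frame. Here is a minimal sketch for a single straight wire segment and a single Emitter; the function name is made up, and a real cable would likely be several segments with a few Emitters spread around that point.

```python
def closest_point_on_segment(a, b, p):
    """Return the point on segment a-b that is nearest to p."""
    ab = [bi - ai for ai, bi in zip(a, b)]
    ap = [pi - ai for ai, pi in zip(a, p)]
    length_sq = sum(c * c for c in ab)
    if length_sq == 0.0:
        return tuple(a)                   # degenerate segment: both ends coincide
    # Project p onto the line through a and b, then clamp to the segment ends.
    t = sum(x * y for x, y in zip(ab, ap)) / length_sq
    t = max(0.0, min(1.0, t))
    return tuple(ai + t * c for ai, c in zip(a, ab))


wire_start = (0.0, 0.0, 0.0)
wire_end = (10.0, 0.0, 0.0)
player = (4.0, 3.0, 0.0)
print(closest_point_on_segment(wire_start, wire_end, player))
```

Running this every frame and moving the Emitter to the result keeps the buzz next to the player for the cost of one Emitter.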

Unfortunately, It Gets Way More Complicated

You now have the basics on how to play sound and how to structure your levels to get approximately the result you want. The issue, however, is that in the real world, sound is far more complicated. Ever thought about how sound reflects more off tiles than it does wooden walls? Or how the bass from a car stereo travels further than treble? What about how a car backfiring in the city can echo back several times as it hits different buildings?

There are so many things you can simulate in order to make things sound “real”.

The simplest is diffraction — how sound moves around the edges of objects in the audio geometry. It too can be calculated with math.

What about moving through large environments like a city, where different buildings and obstacles are meaningful distances from your sound source, like a gunshot? Sound takes time to travel, so the reflections of that sound are going to arrive back at different times and with different properties.
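The timing side of this is simple to estimate: delay is path length divided by the speed of sound. The sketch below assumes roughly 343 m/s (dry air at about 20 °C) and invents some distances for a gunshot and one building.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed: dry air at about 20 degrees Celsius


def arrival_delay_ms(path_length_m, speed=SPEED_OF_SOUND_M_S):
    """Return how long sound takes to travel `path_length_m`, in milliseconds."""
    return path_length_m / speed * 1000.0


direct = arrival_delay_ms(50.0)              # gunshot straight to the listener
echo = arrival_delay_ms(120.0 + 150.0)       # gunshot -> building -> listener
print(f"direct: {direct:.0f} ms, echo: {echo:.0f} ms, gap: {echo - direct:.0f} ms")
```

Every reflecting surface adds another path with its own length, so a single shot can produce a whole series of arrivals.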

If you have a system that simulates and adds rain noise when you’re outside, it won’t be enough simply to add an ambient “rain” sound to the outside volume. If your game has objects that you can shelter under (e.g. bridges, bus stops), you may also need to be checking line of sight to the sky every frame, then use Real Time Parameter Controls to adjust the properties of the rain sound to dampen them.
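A sketch of that per-frame logic might look like the following. The sky check itself is left out (in Unreal it would be a line trace) and is represented by a plain boolean. The function name and `fade_seconds` are invented. The result is a 0 to 1 “exposure” value that could then be handed to Wwise as a Real Time Parameter Control.

```python
def step_exposure(current, sky_visible, dt, fade_seconds=0.5):
    """Move `current` (0 = fully sheltered, 1 = open sky) toward its target.

    `sky_visible` stands in for the result of a line-of-sight check to the sky.
    The change per call is limited so the rain does not snap on and off.
    """
    target = 1.0 if sky_visible else 0.0
    max_step = dt / fade_seconds
    delta = max(-max_step, min(max_step, target - current))
    return current + delta


# Simulate stepping under a bridge and staying there for half a second at 60fps.
exposure = 1.0
for _ in range(30):
    exposure = step_exposure(exposure, sky_visible=False, dt=1 / 60)
print(round(exposure, 3))
```

Smoothing the value rather than switching it avoids an audible jump when the player crosses the edge of cover.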

Do you have plants that would absorb sound in your level, like the indoor park in Prey (2018)? Can you fit them all in the geometry that you send to the sound engine with the amount of RAM you have available? Will you need to use simplified geometry for sound purposes? How will that be stored?

If you’re doing something sufficiently complex, you’re going to run into the limits of your hardware way faster than you expect. If you just place an AkComponent on everything that could ever make sound, you’re going to run out of memory and CPU fast. You may need to start writing your own code to optimize, cull, emulate, and manage what you can afford to keep in memory and executing in CPU.

If you want to dive deeper into just how wild game audio can get, I recommend checking out the GDC talk by David Osternacher and Alex Riviere, Everything is Connected: Ambient Sound in 'Avatar: Frontiers of Pandora'. They have thousands of emitters making sound throughout a forest, and the level of detail is quite frankly stunning.

And if you haven’t gone completely insane by the end of all this (or you don’t have access to the GDC Vault), check out Robert Bantin’s talk Finding Space for Sound: Acoustics in 'Avatar: Frontiers of Pandora', which digs into techniques for creating realistic, immersive in-game sound through reverb, diffraction, and a tonne of other things you can simulate.

The rabbit hole of game audio is as deep as you decide is important for your game.

Summary

We’ve covered a lot here, but it should be enough for you to at least get started using Wwise with Unreal.

To recap, here’s a quick hit list of the most important stuff:

Sounds play by having an Event posted to a game object.

Sound play notifications come from Emitters and are received by Listeners that play the actual sound.

Wwise calculates how sound travels between the Emitter and the Listener using math, instead of simulating the reality of sound.

The path can be affected by geometry that the sound engine has been informed about by AkGeometry, Surface Reflectors, Spot Reflectors, or Surface Reflector Sets.

Rooms organize and group sounds together to make it more efficient to determine what the player can hear and unload / not process anything that they can’t.

Rooms can contain Emitters and also be Emitters for ambient sound.

Portals create a propagation path so they can bridge sound between rooms.

Posting an Event to an AkRoomComponent will make it play ambiently inside the room.

Until next time.

// for those we have lost

// for those we can yet save